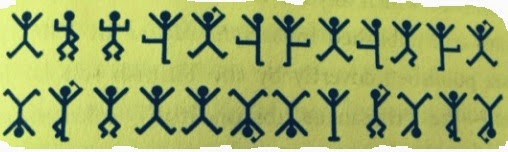

The Dancing Men

In 1903, in southern London, a serial killer was on the loose,

with already five murder cases confirmed and attributed to the perpetrator.

Police wasn’t getting any closer to stopping him, or her. The serial killer was

codenamed “The Dancing Killer” for the simple fact that he (or she) used to

leave funny encoded notes depicting a set of “dancing men” by their victims’

bodies. No one was able to decipher those messages. After the killer’s last

attack, the following message was recovered:

We can start by assuming the symbols in the note map to English letters, which would be the simplest mapping possible. We can also assume that the “flags” in the dancing men’s hands are non-interesting artifacts (a huge assumption, but just bare with me for now), so we’ll ignore them.

The approach will be a brute-force DFS (Depth-First Search) with massive branches pruning based on a dictionary tri. We’ll first map the encoded message to a computer-understandable format, such as the following:

Lov à prefix

Love à word

elsie prepare to meet thy god

elsie prepare to meet thy job

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.IO;

using System.Collections;

class Util

{

public static Hashtable htWordsTri = null;

public static int maxWordLength = 0;

if (String.IsNullOrEmpty(dictionaryFile) ||

!File.Exists(dictionaryFile))

{

Console.WriteLine("Unable to read dictionary file: '{0}'. Please check that the file exists.", dictionaryFile);

return;

}

htWordsTri = new Hashtable();

Random rd = new Random();

int numberOfWords = 0;

string word = srDictionary.ReadLine().ToLower().Trim();

maxWordLength = word.Length;

}

{

string triEntry = word.Substring(0, i);

if (i == word.Length)

{

htWordsTri[triEntry] = true;

}

}

else

{

htWordsTri.Add(triEntry, i == word.Length);

}

}

numberOfWords++;

Console.WriteLine("Processed: {0} words, {1} tri entries", numberOfWords, htWordsTri.Count);

}

}

Console.WriteLine("Total: {0} words, {1} tri entries", numberOfWords, htWordsTri.Count);

srDictionary.Close();

}

}

}

}

A brilliant detective was actually able to crack down this

last message, leading to the apprehension of the assassin. In 1903 there was no

computers around. Thankfully, that’s no longer the case. We can solve this

puzzle with computers now, and that’s what this post is about.

We can start by assuming the symbols in the note map to English letters, which would be the simplest mapping possible. We can also assume that the “flags” in the dancing men’s hands are non-interesting artifacts (a huge assumption, but just bare with me for now), so we’ll ignore them.

The approach will be a brute-force DFS (Depth-First Search) with massive branches pruning based on a dictionary tri. We’ll first map the encoded message to a computer-understandable format, such as the following:

“#1#2#3#4#1#5#6#1#5#7#6#1#8#9#10#1#1#8#8#11#12#13#9#14”

The format #number maps to each distinct dancing man in the

note. To build a tri, we’ll use one of the available English dictionaries on

the web. We’ll build the tri by going to each word and adding to a hash-table all

the substrings of that word. Some substrings will be valid words. Others won’t,

but at least we’ll know that it is a prefix that can lead to a valid word. For

example, suppose that the word in the dictionary is “Love”. We’ll add the

following to the hash-table:

L à prefix

Lo à prefixLov à prefix

Love à word

That way, to prune down the search space, we always check to see

if the current partial word is either a valid prefix or a valid word. If none

of these conditions are met, there is no need to continue the search thru that path

of the tree.

With the tri built, the DFS is a standard one where we keep

track of all the mappings and make use of some minor optimizations along the

way. When you run the code, which is down below, there will be a number of

different and valid mappings for the encoded message, such as: “ear be check he

to feet tv is on”. Non-sense, and most of the decoded strings will be like

that. Hence, there is a need for a post-process of all the decoded strings

generated to really find the real one. It turns out that looking for names of

people in the messages filters them down to just a few, and some of them stand

out, such as:

elsie prepare to meet

thy box

elsie prepare to meet

thy dogelsie prepare to meet thy god

elsie prepare to meet thy job

In particular the third one. Indeed, the message was a

reference to the next victim, Elsie. Police was able to setup a trap and caught

the killer. The detective who decrypted the message was Sherlock Holmes. Took

him less than 5min. He used frequency analysis and the fact that the flags are actually word breakers... And here is the code:

Program.cs

using

System;

using

System.Collections.Generic;

using

System.Linq;

using

System.Text;

using

System.IO;

using

System.Collections;

namespace TheDancingMen

{

class Program

{

static void Main(string[]

args)

{

//Reading

data and preparing the tri

if

(args.Length != 2)

{

Console.WriteLine("Usage: TheDancingMen.exe <dictionary text

file> <encoded message text file>");

return;

}

string

dictionaryFile = args[0];

Util.BuildTriFromDictionary(dictionaryFile);

string

encodedMessageFile = args[1];

FileInfo

fiEncodedMessageFile = new FileInfo(encodedMessageFile);

StreamReader

srEncodedMessageFile = fiEncodedMessageFile.OpenText();

string

encodedMessage = srEncodedMessageFile.ReadToEnd();

if

(srEncodedMessageFile != null)

{

srEncodedMessageFile.Close();

}

//Main

logic

string[]

encodedMessageParts = encodedMessage.Split(new char[] { '#' }, StringSplitOptions.RemoveEmptyEntries);

Hashtable

htLettersUsedForMapping = new Hashtable();

Hashtable

htEncodedCharToLetter = new Hashtable();

Hashtable

htPhrasesUsed = new Hashtable();

Process(encodedMessageParts,

0,

"",

"",

htLettersUsedForMapping,

htEncodedCharToLetter,

htPhrasesUsed,

0);

}

static void Process(string[]

encodedMessageParts,

int currentIndex,

string currentDecodedWord,

string

currentDecodedPhrase,

Hashtable htLettersUsedForMapping,

Hashtable htEncodedCharToLetter,

Hashtable htPhrasesUsed,

int actualNumberOfCharsInDecodedMessage)

{

if

(currentIndex >= encodedMessageParts.Length)

{

if

(actualNumberOfCharsInDecodedMessage == encodedMessageParts.Length &&

!htPhrasesUsed.ContainsKey(currentDecodedPhrase))

{

htPhrasesUsed.Add(currentDecodedPhrase, true);

Console.WriteLine("Message: {0}", currentDecodedPhrase);

}

return;

}

if

(htEncodedCharToLetter.ContainsKey(encodedMessageParts[currentIndex]))

{

string

newDecodedWord = currentDecodedWord + ((char)htEncodedCharToLetter[encodedMessageParts[currentIndex]]).ToString();

if

(Util.htWordsTri.ContainsKey(newDecodedWord))

{

if

((bool)Util.htWordsTri[newDecodedWord]

&&

newDecodedWord.Length

> 1) //Valid word found

{

Process(encodedMessageParts,

currentIndex +

1,

"",

currentDecodedPhrase + " "

+ newDecodedWord,

htLettersUsedForMapping,

htEncodedCharToLetter,

htPhrasesUsed,

actualNumberOfCharsInDecodedMessage + newDecodedWord.Length);

}

//And

in both cases (valid word or not), since the partial word is in the tri,

proceed

Process(encodedMessageParts,

currentIndex + 1,

newDecodedWord,

currentDecodedPhrase,

htLettersUsedForMapping,

htEncodedCharToLetter,

htPhrasesUsed,

actualNumberOfCharsInDecodedMessage);

}

}

else

{

for(char letter = 'a';letter

<= 'z';letter++)

{

if

(!htLettersUsedForMapping.ContainsKey(letter))

{

htLettersUsedForMapping.Add(letter,

true);

htEncodedCharToLetter.Add(encodedMessageParts[currentIndex], letter);

string newDecodedWord = currentDecodedWord + letter.ToString();

if (Util.htWordsTri.ContainsKey(newDecodedWord))

{

if ((bool)Util.htWordsTri[newDecodedWord] &&

newDecodedWord.Length > 1) //Valid word

found

{

Process(encodedMessageParts,

currentIndex + 1,

"",

currentDecodedPhrase + " "

+ newDecodedWord,

htLettersUsedForMapping,

htEncodedCharToLetter,

htPhrasesUsed,

actualNumberOfCharsInDecodedMessage + newDecodedWord.Length);

}

//And in both cases (valid word or not), since the partial

word is in the tri, proceed

Process(encodedMessageParts,

currentIndex + 1,

newDecodedWord,

currentDecodedPhrase,

htLettersUsedForMapping,

htEncodedCharToLetter,

htPhrasesUsed,

actualNumberOfCharsInDecodedMessage);

}

htEncodedCharToLetter.Remove(encodedMessageParts[currentIndex]);

htLettersUsedForMapping.Remove(letter);

}

}

}

}

}

}

Util.cs

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.IO;

using System.Collections;

namespace

TheDancingMen

{class Util

{

public static Hashtable htWordsTri = null;

public static int maxWordLength = 0;

public static void

BuildTriFromDictionary(string dictionaryFile)

{if (String.IsNullOrEmpty(dictionaryFile) ||

!File.Exists(dictionaryFile))

{

Console.WriteLine("Unable to read dictionary file: '{0}'. Please check that the file exists.", dictionaryFile);

return;

}

FileInfo

fiDictionary = new FileInfo(dictionaryFile);

StreamReader

srDictionary = fiDictionary.OpenText();htWordsTri = new Hashtable();

Random rd = new Random();

int numberOfWords = 0;

while

(!srDictionary.EndOfStream)

{string word = srDictionary.ReadLine().ToLower().Trim();

if(word.Length

> maxWordLength)

{maxWordLength = word.Length;

}

//Add

it to the tri

for

(int i = 1; i <= word.Length; i++){

string triEntry = word.Substring(0, i);

if

(htWordsTri.ContainsKey(triEntry))

{if (i == word.Length)

{

htWordsTri[triEntry] = true;

}

}

else

{

htWordsTri.Add(triEntry, i == word.Length);

}

}

numberOfWords++;

if

(rd.Next(0, 10000) < 1) //Probability: 1/10000 *

100%

{Console.WriteLine("Processed: {0} words, {1} tri entries", numberOfWords, htWordsTri.Count);

}

}

Console.WriteLine("Total: {0} words, {1} tri entries", numberOfWords, htWordsTri.Count);

if

(srDictionary != null)

{srDictionary.Close();

}

}

}

}

Comments

Post a Comment